Turning Strengths into Weaknesses: A Certified Robustness Inspired Attack Framework against Graph Neural Networks

Abstract

- GNN have achieved state-of-the-art performance in many graph tasks

- However, GNNs are vulnerable to test-time evasion and training-time poisoning attacks

- Certified robustness is used to defend adversarial attacks, but authors use its properties to attack

- For a node with larger certified robustness, it is more robust to graph perturbations. So nodes with smaller certified robustness are more easy to attack.

- Contribution : Design a certified robustness inspired attack loss, and produces its counterpart.

Introduction

Background

The development of GNNs : many methods on GNNs have achieved state-of-the-art

Attacks : GNNs are vulnerable to test-time graph evasion attacks and training-time graph poisoning attacks

- Graph Evasion : Given a learned GNNs model and a clean graph, attacker perturbs the graph structure to make model make mistakes

- Graph Poison : Given a GNN algorithm and a graph, attacker perturbs the graph structure in the training phase so that the model make mistakes in test-time

Contribution / FrameWork

generalize randomized smoothing and derive the node’s certified perturbation size, which inspire us to focus more on disrupting nodes with smaller certified perturbation size

Design a certified robustness inspired attack loss : modify the weights for different nodes, which means nodes with smaller certified robustness would be assigned larger weight while the larger nodes would be assigned smaller weight. So that more perturbation budget would be allocated to these large weighted nodes.

Design certified robustness attack inspired attack framework that only modify the existing attack loss with certified perturbation size defined node weights. So that any attack existing evasion and poison method could regarded as the base attack of the framework

Background and Preliminaries

GNN : node classification

$\mathcal V$ : nodes set

$u \in \mathcal V$ : node in nodes set

$\varepsilon$ : edges set

$(u,v) \in \varepsilon$ : edge in edges set

$G = (\mathcal V,\varepsilon)$ : graph

$A \in {0,1}^{|\mathcal V|\times|\mathcal V|}$ : adjacency matrix

$\mathcal Y$ : nodes’ labels set

$y_u \in \mathcal Y$ : the label of node u

$\mathcal V_{Tr},\mathcal V_{Te}$ : the train / test nodes set

$\mathcal A$ : the algorithm of GNN

$f_\theta = \mathcal A(A,\mathcal V_{Tr}) : G(A)\rightarrow \mathcal Y^{|\mathcal V|}$ : the node classifier with its parameters $\theta$, training with graph and training nodes

Loss function : $\min_\theta \mathcal L(f_\theta,A,\mathcal V_{Tr}) = \sum_{u\in \mathcal V_{Tr}} l(f_\theta,A,y_u)$

Adversarial Attacks to GNNs

$\delta \in {0,1}^{|\mathcal V|\times|\mathcal V|}$ : perturbations, the element $\delta_{s,t}$ means change the status of edge $(s,t)$

$A \oplus \delta$ : adjacency matrix of graph make XOR with perturbations

$\Delta \ge |\delta|$ : perturbations budget

evasion attack

The attacker aims to maximize the loss follows :

$$

\max_{\delta} \sum_{v \in \mathcal V_{Te}} \mathbf 1[f_\theta(A\oplus\delta;v)\ne y_v],s.t. |\delta|\le \Delta

$$

Due to the problem above is challenging to solve, optimize the loss below :

$$

\max_\delta \sum_{v\in \mathcal V_{Te}} l(f_\theta(A\oplus\delta;v)\ne y_v),s.t. |\delta|\le \Delta

$$

poison attack

The attacker aims to solve the bilevel optimization problem :

$$

\max_{\delta} \sum_{v \in \mathcal V_{Te}} \mathbf 1[f_\theta(A\oplus\delta;v)\ne y_v]\

s.t. \theta = \arg \min_\theta \sum_{u \in \mathcal V_{Tr}}\mathbf 1[f_\theta(A\oplus\delta;u)\ne y_u],|\delta|\le \Delta

$$

In practice, the label of test set is unavailable during training. As a result, the optimization problem above can’t solve and it is hard to solve in fact. Consequently, we optimize the problem below instead.

$$

\max_{\delta} \sum_{v \in \mathcal V_{Tr}} l[f_\theta(A\oplus\delta;v)\ne y_v]\

s.t. \theta = \arg \min_\theta \sum_{u \in \mathcal V_{Tr}} l[f_\theta(A\oplus\delta;u)\ne y_u],|\delta|\le \Delta

$$

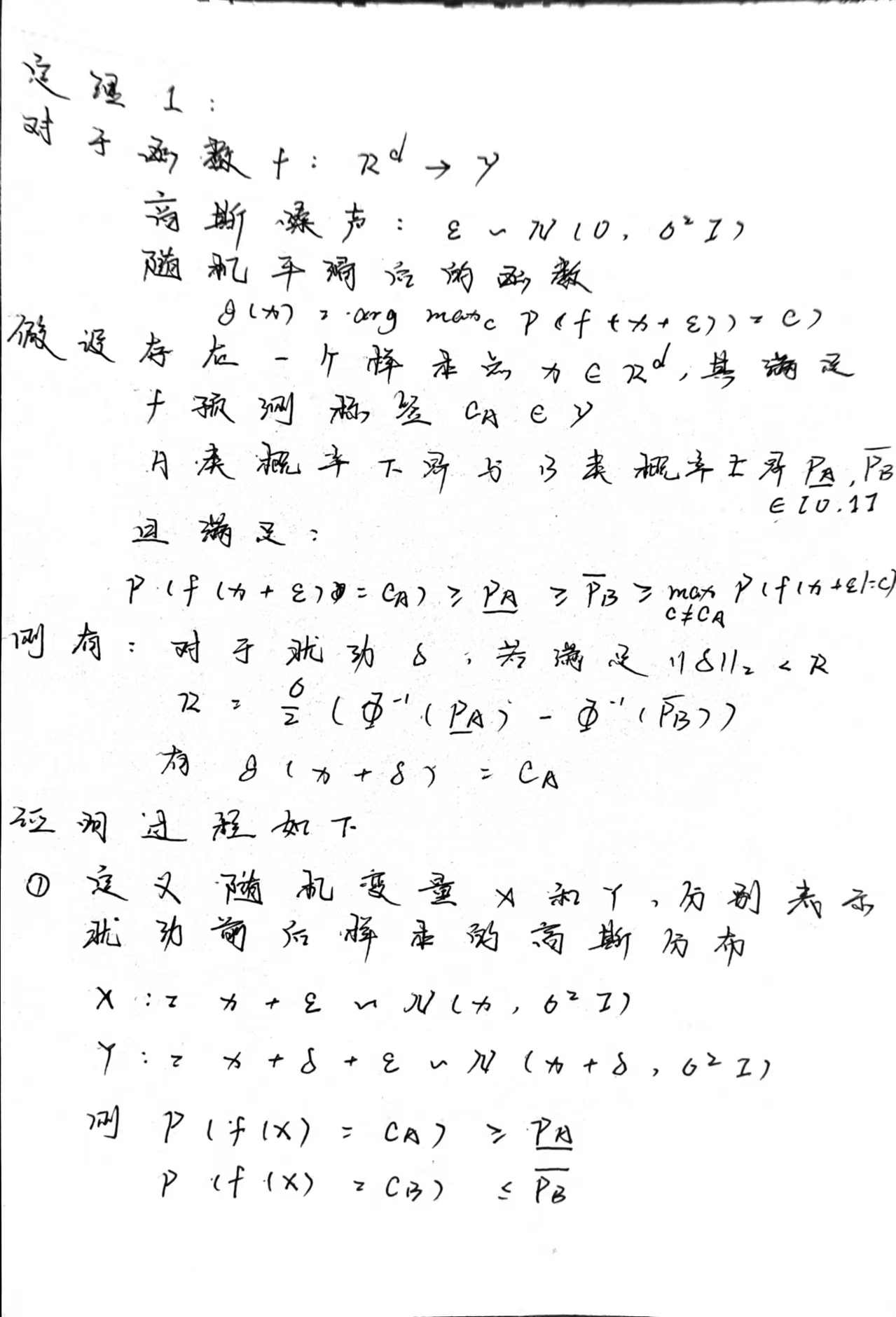

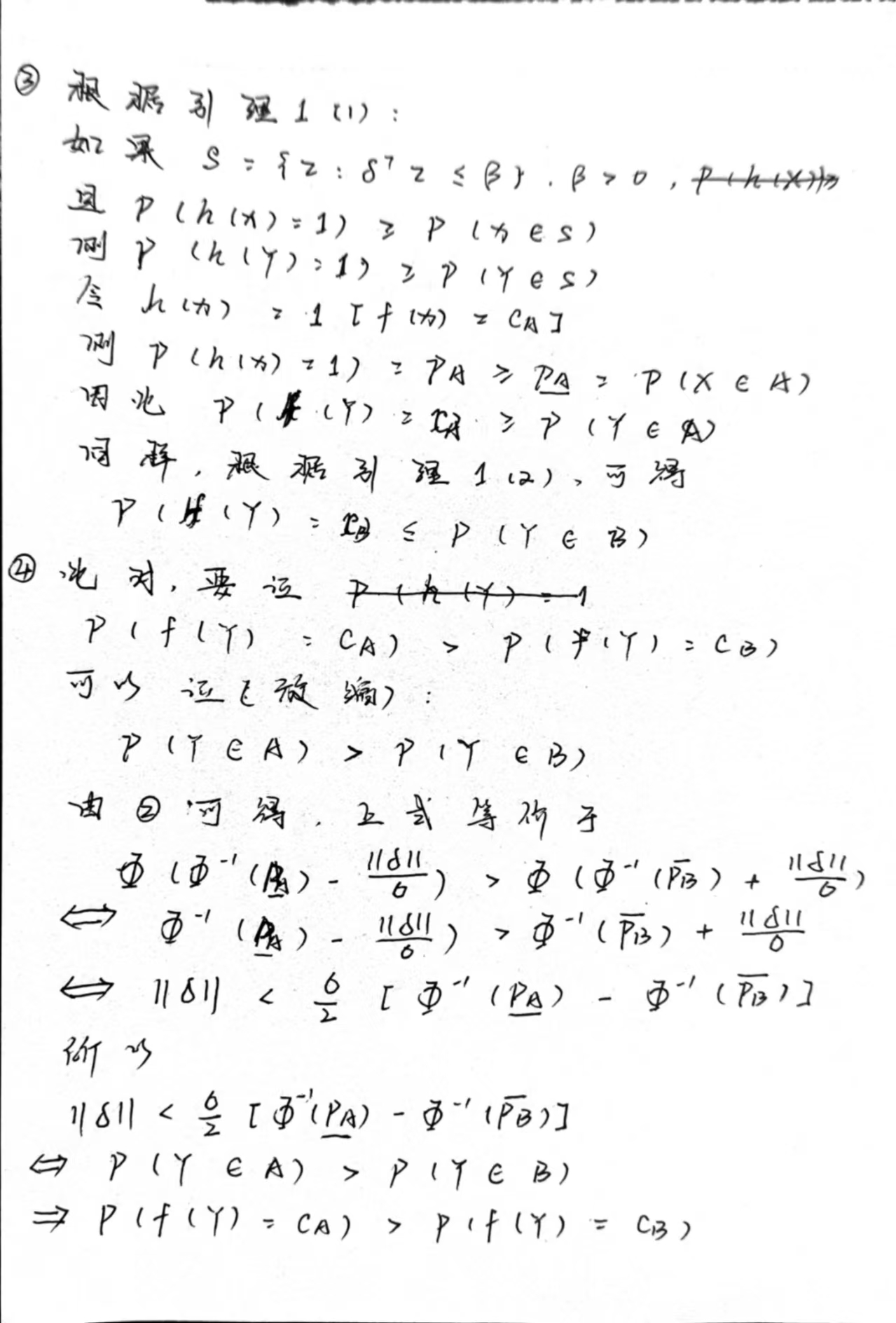

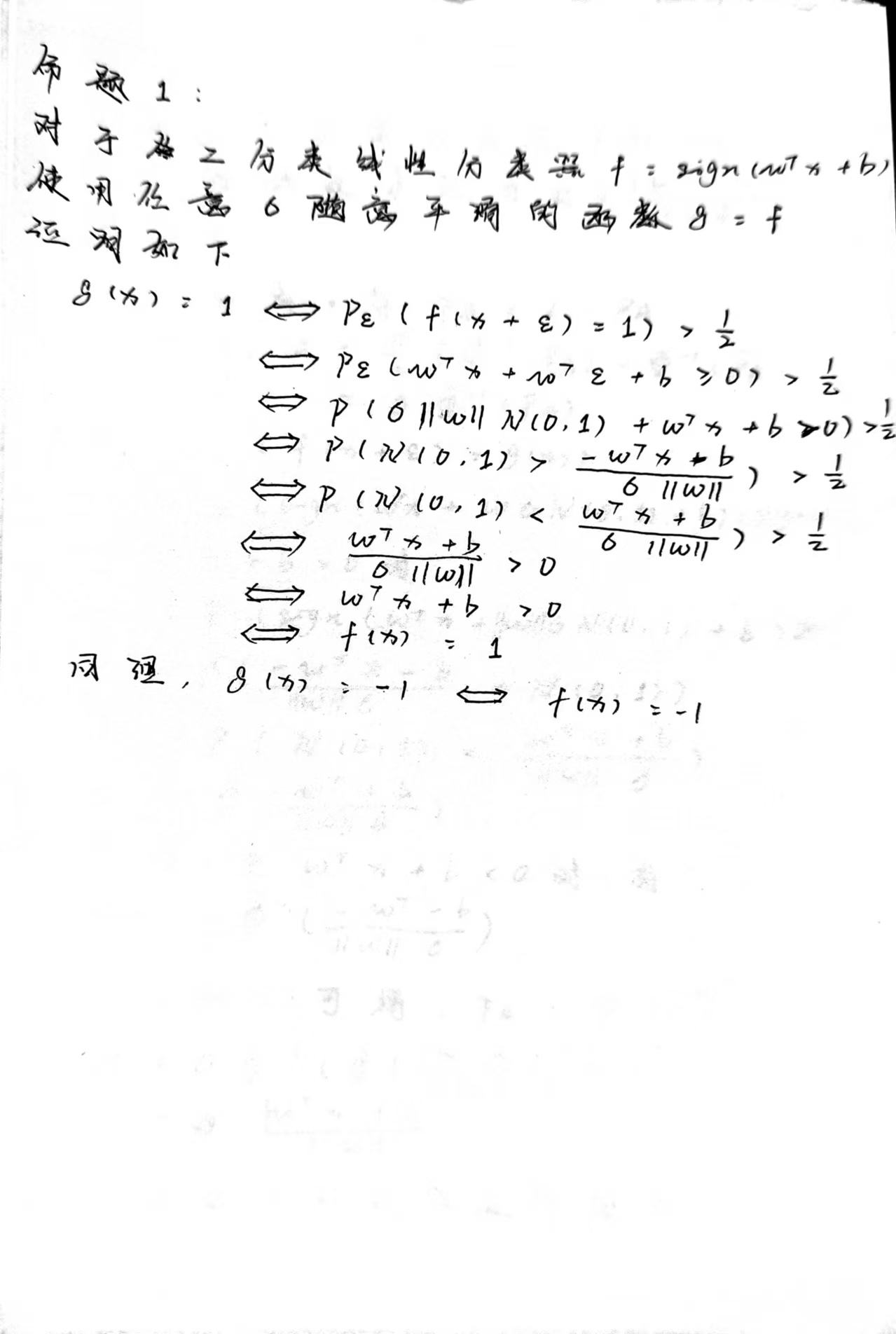

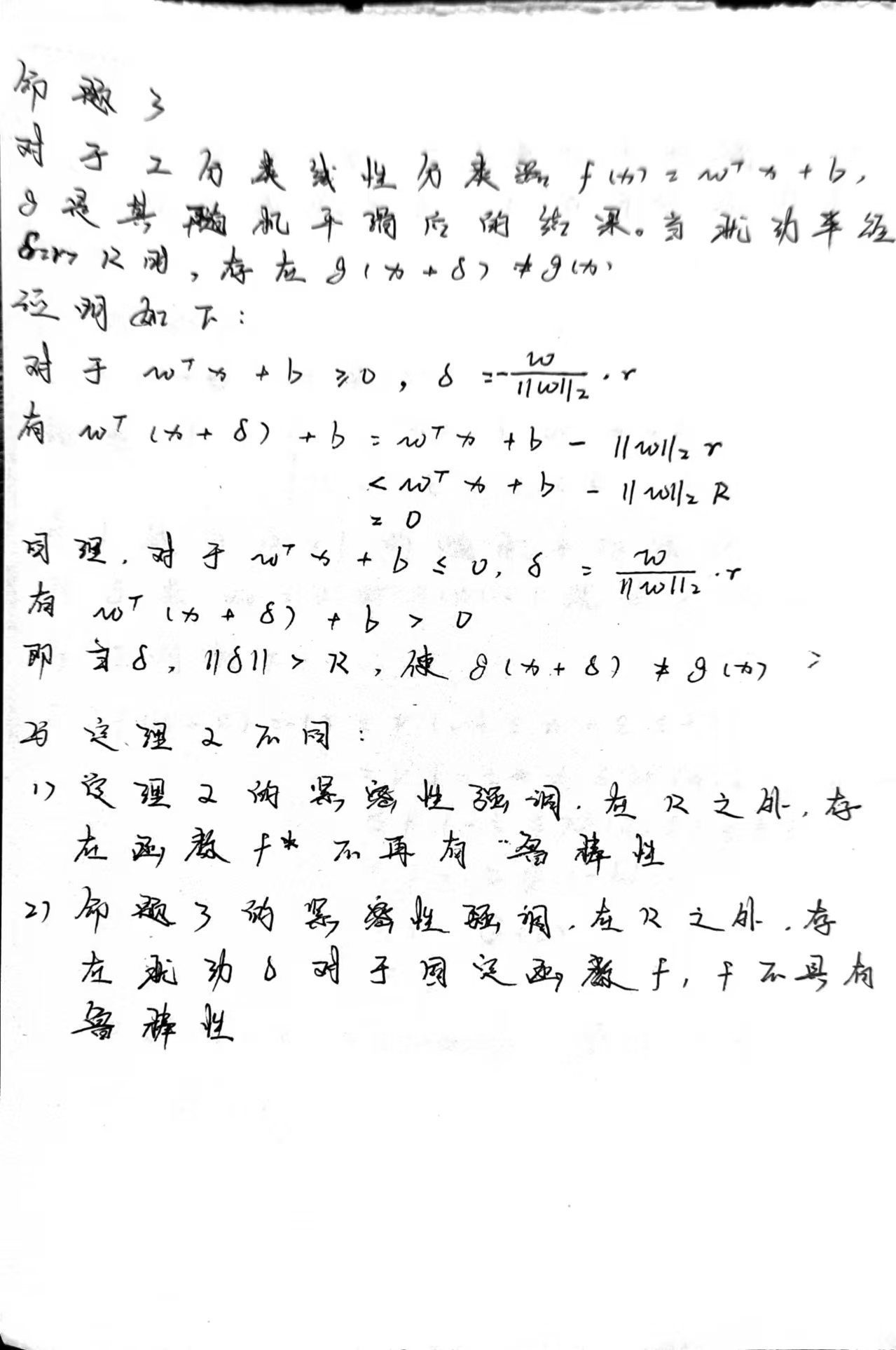

Certified Robustness to Graph Evasion Attacks

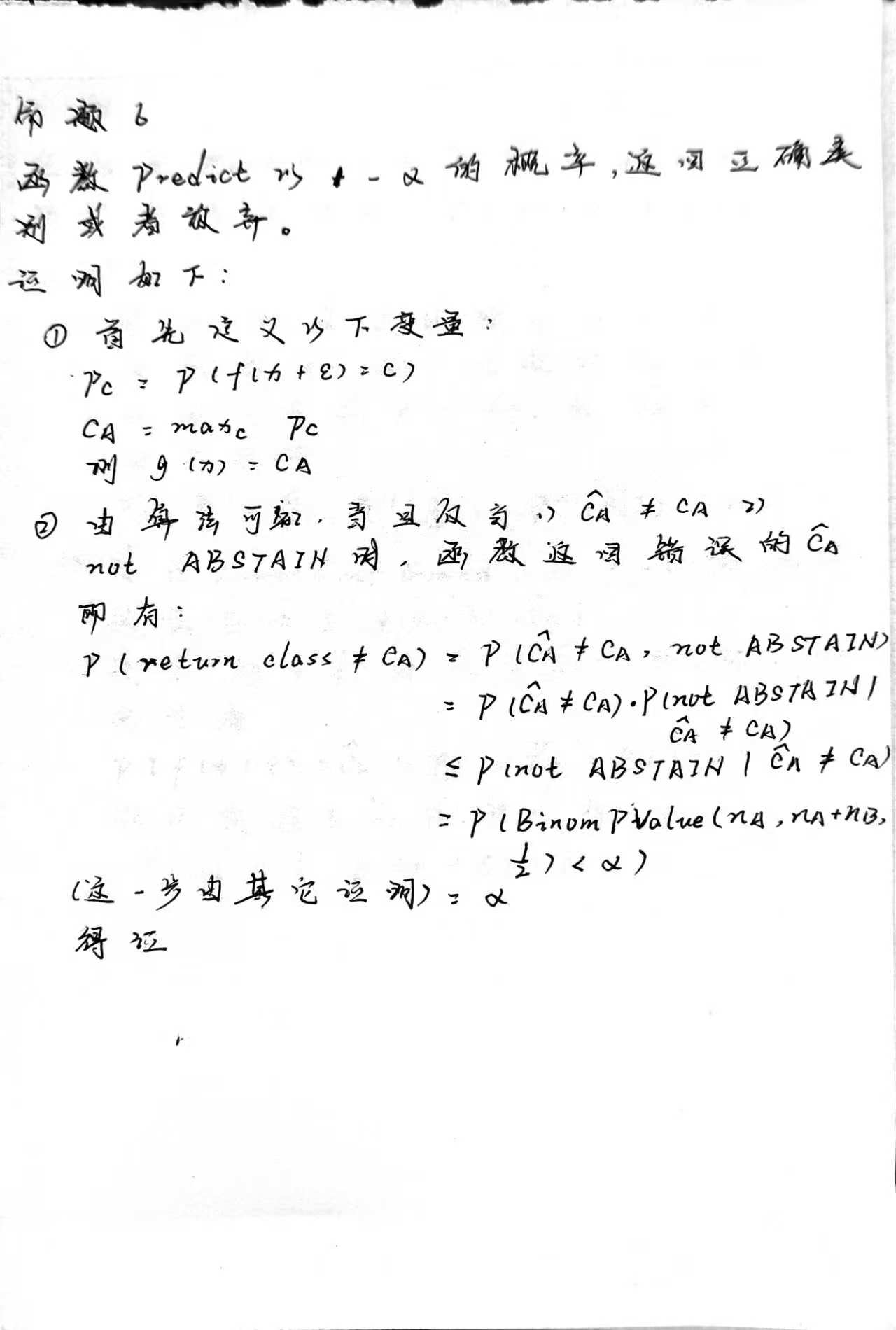

The random smoothing that defends against graph evasion attacks to GNNs consists of the three parts :

construct smoothed node classifier

Given base classifier $f$, graph $G$, and test node $u$ with its label $y_u$. Then randomized smoothing node classifier g as follow :

$$

g(A;u) = \arg \max_{c\in \mathcal Y}Pr(f(A\oplus \varepsilon;u)=c)\

Pr(\varepsilon_{s,t}=0) = \beta,Pr(\varepsilon_{s,t}=1) = 1-\beta,

$$

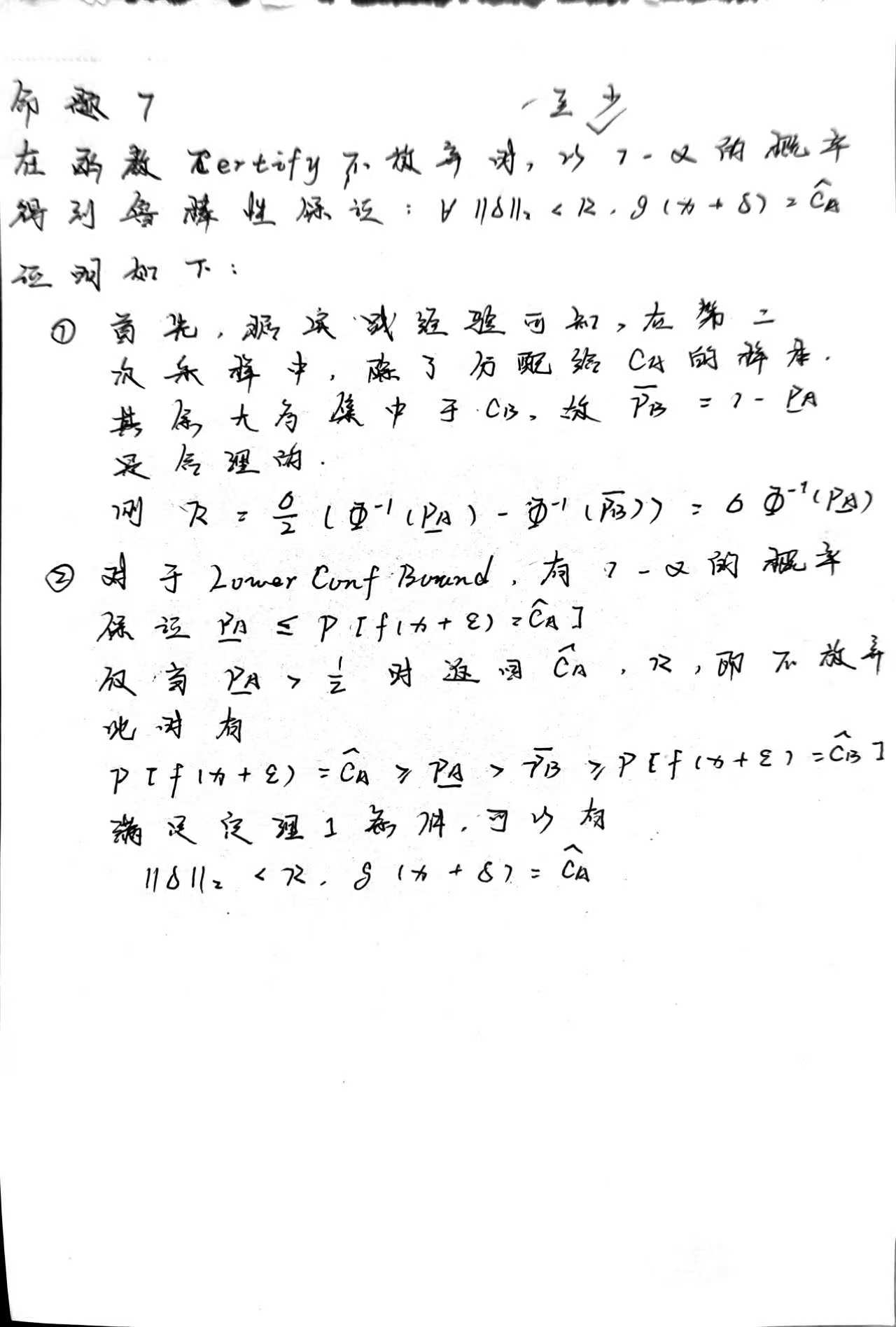

Deriving the certified robustness of graph evasion attacks of GNNs

$g(A;u)=y_u$ means $g$ predicts correctly the label of u. Then $g$ provably predicts the correct label for $u$ if $\delta$ is bounded.

$$

g(A\oplus \delta;u)=y_u,\forall \delta|\delta|0\le K(\underline{p{y_u}})

$$

$\underline{p_{y_u}}$is the lower bound of the probability that f predict the correct label of u on the noisy $\varepsilon$

$K(\underline{p_{y_u}})$ is the certified robustness of the node u.

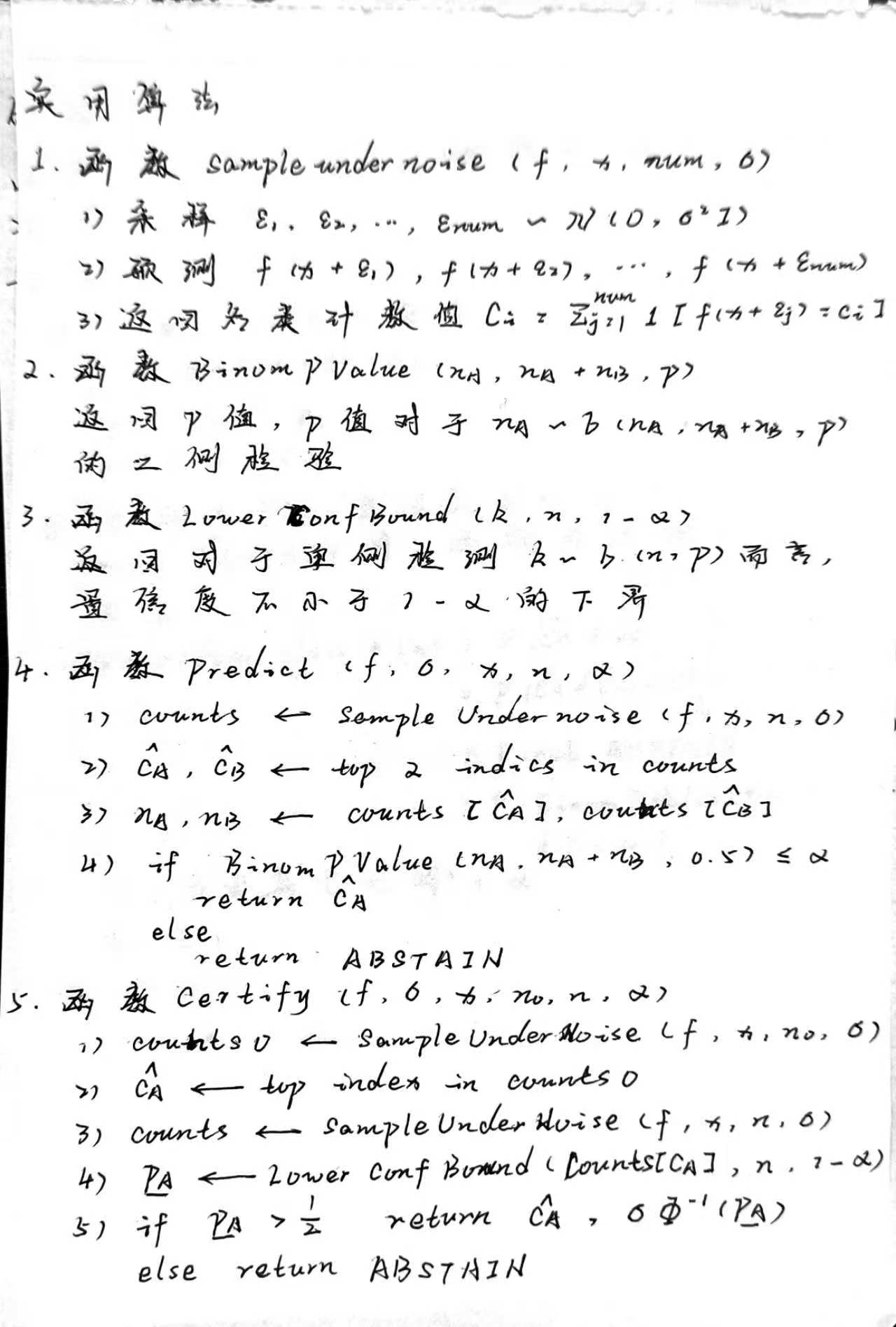

Computing the certified perturbation size in practice

1)sample perturbations $\varepsilon^1,\varepsilon^2,…,\varepsilon^{n}$ from the distribution $Pr(\varepsilon_{s,t}=0) = \beta,Pr(\varepsilon_{s,t}=1) = 1-\beta$

2)add perturbations to $A$ and get $A\oplus \varepsilon^1,A\oplus \varepsilon^2,…,A\oplus \varepsilon^n$

3)use f to predict $u$’s label and counts the numbers of each label by $N_c = \sum_{j=1}^N \mathbb I(f(A\oplus \varepsilon,u)=c),c\in \mathcal Y$

4)compute $\underline{p_{y_u}} = B(\alpha,N_{y_u},N-N_{y_u}-1)$, where $1-\alpha$ is confidence level and $B(\alpha,a,b)$ is the $\alpha$-th quantile of Beta distribution with shape parameters a and b.

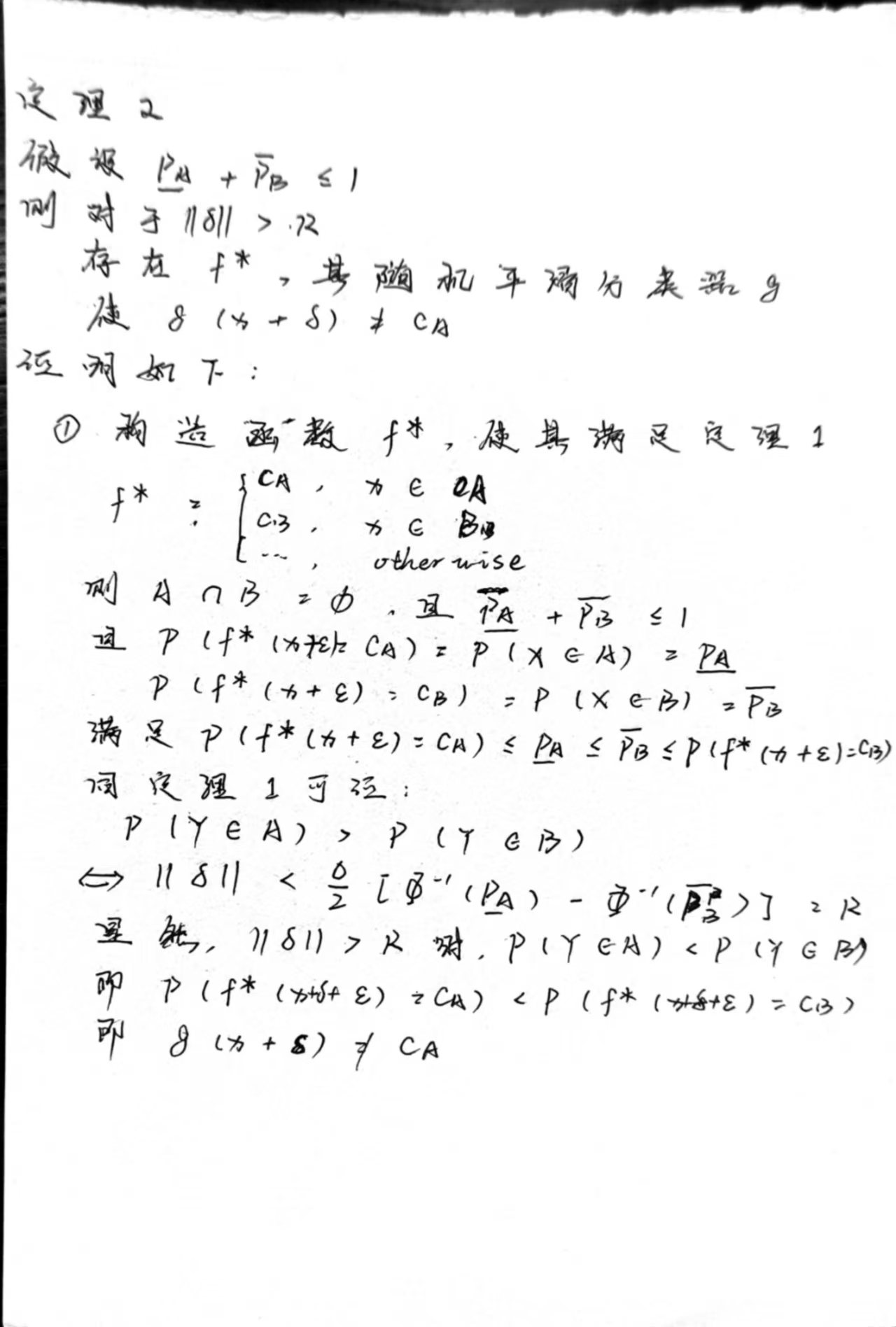

Certified Robustness to Graph Poisoning Attacks via randomized smoothing

Main Idea

extend randomized smoothing from the classifier to a general function

Building base function

Define $\widetilde f(A,\mathcal V_{Tr};v)$ as the composition of 1) training the model with graph $G(A)$, algorithm $\mathcal A$ and training nodes set $\mathcal V_{Tr}$ ; 2) test the model with node $v$

View $\widetilde f$ as the base function.

Construct a smoothed function

Define the randomized smoothed function as follow :

$$

\widetilde g(A,\mathcal V_{Tr};v) = \arg \max_{c\in \mathcal Y} Pr(\widetilde f(A\oplus \epsilon,\mathcal V_{Tr};v)=c)

$$

where

$\epsilon \in {0,1}^{|\mathcal V|\times |\mathcal V|}$ : noise matrix whose element $\epsilon_{s,t}$ drawn from discrete distribution

Deriving the certified robustness of graph poisoning attacks of GNNs

$$

\widetilde g(A\oplus \delta,\mathcal V_{Tr};v)=y_v,\forall |\delta|0\le K(p{y_v})

$$

computing the certified perturbation size in practice

Given : algorithm $\mathcal A$, graph $G(A)$, training nodes $\mathcal V_{Tr}$, and discrete distribution $Pr(\varepsilon_{s,t}=0) = \beta,Pr(\varepsilon_{s,t}=1) = 1-\beta$, and a test node $v$

Steps :

- sample $N$ random noise matrices $\epsilon^1,…,\epsilon^N$ from the discrete distribution given

- add noise matrix to adjacency matrix and get $A\oplus\epsilon^1,…,A\oplus \epsilon^N$

- train the classifiers $\widetilde f^1 = \mathcal A(A\oplus\epsilon^1,\mathcal V_{Tr},…,\widetilde f^N = \mathcal A(A\oplus\epsilon^N,\mathcal V_{Tr})$

- use the classifiers predict v’s labels and count the number of all kinds of labels, $N_c = \sum_{j=1}^N\mathbb I(\widetilde f^j(A\oplus\epsilon^j,\mathcal V_{Tr};v)=c),c\in \mathcal Y$

- estimate $\underline{p_{y_v}}$ by $\underline{p_{y_v}} = B(\alpha,N_{y_v},N-N_{y_v}-1)$ and calculate the perturbation size

Certified Robustness Inspired Attack Framework against GNNs

Observation

Observation

A node with a larger(smaller) certified perturbation size should be disrupted with a smaller(larger) number of perturbation edges.

Idea

With a perturbation budget, an attacker should avoid disrupting nodes with larger certified perturbation sizes, but focus on the nodes with smaller perturbation size.

Questions and Measures

- How to get the perturbation sizes ? : derived node’s certified perturbation size

- How to allocate the budget for the nodes ? : a certified perturbation inspired loss

- How to generate the perturbation ? : certified robustness inspired attack framework

Certified Robustness Inspired Loss Design

Naive solution and its weakness

Solution : sorts all nodes’ certified robustness sizes in an ascending order and perturbs them one-by-one until reaching the budget.

Weakness : computationally intensive and suboptimal

Idea

The loss functions in the poison and evasion are defined for each nodes. So that, assign each node with a weight which associated with its certified perturbation. The loss function as follow :

$$

L_{CR}(f_\theta,A,\mathcal V_T) = \sum_{u \in V_T} w(u)\cdot \mathcal l(f_\theta(A;u),y_u)\

w(u) = \frac{1}{1+\exp(a\cdot K(\underline{p_{y_u}}))}

$$

where $a$ is super parameter

Certified Robustness Inspired Attack Design

certified robustness inspired evasion attacks to generate graph perturbations

For any base attack, only modify the loss by multiplying it with certification perturbation sizes defined node weights.

For instance, use PGD attack. The perturbation should iteratively generates by

$$

\delta = Proj_{\mathbb B}(\delta+\eta \cdot \nabla_\delta \mathcal L_{CR}(f_\theta,A\oplus \delta, \mathcal V_{Te})\

\mathbb B={\delta:1^T\cdot \delta\le \Delta,\delta\in[0,1]^{|\mathcal V|\times\mathcal V|}}\

Proj_{\mathbb B}(a) = \left{ \begin{array}{rcl}

\Pi_{[0,1]}(a-\mu1),1^T\Pi_{[0,1]}(a-\mu1)=\Delta\

\Pi_{[0,1]}(a),1^T\Pi_{[0,1]}(a-\mu1)\le\Delta

\end{array}\right.

$$

certified robustness inspired graph poisoning attacks to generate graph perturbations

$$

\max_\delta \mathcal L_{CR}(f_\theta*,A\oplus\delta,\mathcal V_{Tr})\

s.t. \theta^* = \arg\min_{\theta} \mathcal L_{CR}(f_\theta,A\oplus\delta,\mathcal V_{Tr}),|\delta|\le \Delta

$$

问题:

- 将随机平滑从分类器扩展到一般函数,似乎不是很明显?